A 2-staged ML-based Detection of COVID-19 and Pneumonia

Authors: Sahil Khose, Richa Kulkarni, Shubham Kulkarni, James Wellington, Sneha Talwalkar

Introduction

The initial cases of COVID-19 were detected in Wuhan, China in late 2019. Though several precautionary steps were taken to contain the spread of the disease, by spring 2020 the virus had been reported in most countries and the World Health Organization (WHO) had declared the outbreak a pandemic. [9]

The Polymerase Chain Reaction (PCR) test, which is the standard test for detecting COVID-19, detects the presence of viral genetic material (RNA). However, this test is time-consuming, requires high precision, and has a small possibility of false negatives. [10] Furthermore, many countries lack the infrastructure required for conducting these tests on a large scale. To overcome such issues, chest X-rays are often used as an alternative to the PCR test.

In such a scenario, Machine Learning solutions can play a crucial role. Many recent works have encouraged development based on deep learning technologies that can help human experts make better and faster decisions about their patients' health. To accelerate the detection of COVID-19 from X-ray images, our project aims to provide a new, completely automated diagnostic platform using convolutional neural networks (CNNs) that is able to:

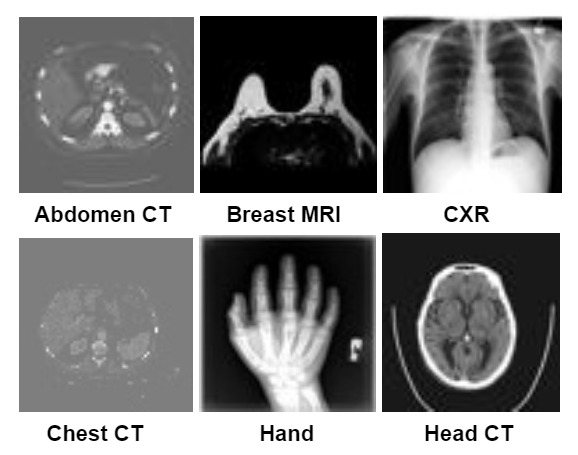

Stage 1. Segregate medical reports by type, such as Chest CT, Chest X-Ray, and Abdomen CT, and then

Stage 2. Use the recognized Chest X-Ray reports for a downstream task of detecting COVID-19 or Viral Pneumonia.

We do this using 2 medical imaging datasets mentioned below.

Stage 1. Medical MNIST

- Contains 58954 total images

- Task: Multi-class Classification

- 6 classes: AbdomenCT, BreastMRI, CXR, ChestCT, Hand, HeadCT

Stage 2. Covid-19 Image Dataset

- Contains 317 total images (train and test)

- Task: Multi-class Classification

- 3 classes: COVID-19, Viral Pneumonia, Normal Chest X-Rays
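The two-stage flow described above can be sketched in a few lines. This is only an illustration of the routing logic, not the project's code: the `Scorer` type, `argmax`, and `two_stage_predict` are hypothetical names, each model is assumed to be a callable that returns one score per class, and any resizing between the two stages is omitted. The Stage 2 label order follows the one used in the t-SNE discussion of this report (0: COVID, 1: Normal, 2: Viral Pneumonia).

```python
from typing import Callable, Optional, Sequence, Tuple

# Stage 1 classes follow the Medical MNIST list; Stage 2 follows the label
# order used in this report (0: COVID, 1: Normal, 2: Viral Pneumonia).
STAGE1_CLASSES = ["AbdomenCT", "BreastMRI", "CXR", "ChestCT", "Hand", "HeadCT"]
STAGE2_CLASSES = ["COVID-19", "Normal", "Viral Pneumonia"]

# Hypothetical interface: a model maps an image to one score per class.
Scorer = Callable[[object], Sequence[float]]


def argmax(scores: Sequence[float]) -> int:
    """Index of the largest score."""
    return max(range(len(scores)), key=lambda i: scores[i])


def two_stage_predict(
    image: object, stage1: Scorer, stage2: Scorer
) -> Tuple[str, Optional[str]]:
    """Return (report type, diagnosis); diagnosis is None unless the type is CXR."""
    report_type = STAGE1_CLASSES[argmax(stage1(image))]
    if report_type != "CXR":
        # Only chest X-rays are passed on to the COVID-19 / pneumonia classifier.
        return report_type, None
    return report_type, STAGE2_CLASSES[argmax(stage2(image))]
```

With stub scorers, an image classified as `Hand` in Stage 1 returns `("Hand", None)` and never reaches the Stage 2 model.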

Problem Definition

Medical imaging plays a crucial role in the diagnosis and treatment of various diseases, and computer vision techniques have shown promising results in analyzing and interpreting medical images. With the outbreak of COVID-19, there has been a surge in the number of Chest CT and Chest X-Ray scans, making it challenging for radiologists to efficiently triage and diagnose patients. Therefore, there is a pressing need for an automated solution that can segregate medical reports based on their type and detect COVID-19 or Viral Pneumonia from Chest X-Ray reports.

Gao [6] proposed a deep CNN to distinguish between Covid-19 pneumonia and non-Covid-19 pneumonia based on chest X-rays. Both Hamza et al. [7] and Kumar and Mallik [8] propose using pre-trained deep CNN models such as ResNet50 and InceptionV3, enhanced through transfer learning, to detect Covid-19 from chest X-rays. In this project, we aim to develop a completely automated system that can segregate different types of medical reports and use the recognized Chest X-Ray reports for the downstream task of COVID-19 or Viral Pneumonia detection. The proposed solution has the potential to provide fast and accurate diagnoses, reducing the burden on radiologists and improving the overall efficiency of the healthcare system due to its high scalability.

Data Collection

The Medical MNIST and Covid-19 datasets are two popular medical image datasets that we collected from Kaggle. The Medical MNIST dataset is often used for classification tasks and is a good benchmark dataset for evaluating machine learning algorithms. The Covid-19 dataset, on the other hand, consists of chest X-ray images of patients with Covid-19, normal patients, and patients with pneumonia. This dataset has been widely used for research on Covid-19 diagnosis and classification using machine learning techniques.

Data Cleaning

As both datasets are standard datasets taken from Kaggle, our analysis found no need for cleaning. The COVID-19 images have different dimensions, so we resize all of them to (224, 224), which is a standard image resolution for similar medical imaging problems.

Methods

Supervised Methods

Because image data is high-dimensional, we focus primarily on Convolutional Neural Networks to train our models for both classification tasks.

Stage 1. Medical MNIST

- We use a custom-built CNN model with 2 Conv layers and 2 Linear Layers for our Medical MNIST task. We do this because the image dimension of this dataset is small (64, 64, 3).

- We use 32 and 16 channels for the Conv layers followed by a flatten and Dropout of 0.2.

- We use Maxpool after each conv layer with stride (2, 2) to reduce the spatial dimensions of the feature maps.

- Linear layers have 64 nodes each with ReLU activation function in between.

- We use a batch size of 128 and train the model for 10 epochs with a learning rate of 1e-3, using the Adam optimizer on the Cross Entropy Loss.
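The Stage 1 architecture listed above could look roughly like the following PyTorch sketch. Several details are assumptions rather than facts from this report: the 3x3 kernels with padding 1, the ReLU after each conv layer, and the reading of the two linear layers as a 64-unit hidden layer followed by a 6-way output layer. The class name `SmallCNN` is hypothetical.

```python
import torch
from torch import nn


class SmallCNN(nn.Module):
    """2 conv layers (32 and 16 channels) + 2 linear layers for 64x64x3 inputs."""

    def __init__(self, num_classes: int = 6):
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(3, 32, kernel_size=3, padding=1),   # kernel/padding assumed
            nn.ReLU(),                                    # activation assumed
            nn.MaxPool2d(kernel_size=2, stride=2),        # 64x64 -> 32x32
            nn.Conv2d(32, 16, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2, stride=2),        # 32x32 -> 16x16
        )
        self.classifier = nn.Sequential(
            nn.Flatten(),
            nn.Dropout(p=0.2),
            nn.Linear(16 * 16 * 16, 64),                  # 16 channels of 16x16
            nn.ReLU(),
            nn.Linear(64, num_classes),                   # output width assumed
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.classifier(self.features(x))


model = SmallCNN()
optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)
criterion = nn.CrossEntropyLoss()

# One optimization step on a random stand-in batch of size 128.
images = torch.randn(128, 3, 64, 64)
labels = torch.randint(0, 6, (128,))
optimizer.zero_grad()
loss = criterion(model(images), labels)
loss.backward()
optimizer.step()
```

In a real run the random batch would be replaced by a `DataLoader` over Medical MNIST and the step repeated for 10 epochs.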

Stage 2. COVID-19

- EfficientNet-b0

- We use ImageNet pre-trained EfficientNet-b0 [5] model to benchmark the COVID-19 dataset.

- ImageNet is a standard computer vision dataset that has 1000 classes and over 1 million images for the entire dataset.

- Using a pre-trained model for downstream applications is called Transfer Learning. We use a pre-trained model because the images in this dataset have higher dimensions, and we found this task harder to optimize than the Medical MNIST task.

- We use a batch size of 8 and train the model for 40 epochs with a learning rate of 1e-4, using the Adam optimizer on the Cross Entropy Loss.

- The architecture (compound scaling technique) and performance of the EfficientNet models is visualized below:

- ResNet-18

- We also used a pre-trained ResNet-18 [4] model for the COVID-19 dataset.

- ResNet-18 is a popular convolutional neural network architecture that has been shown to perform well on image classification tasks.

- It has 18 layers and uses residual connections to improve training stability and performance.

- Using the pre-trained model lets us leverage the knowledge that the model has already learned from the large ImageNet dataset.

- We use a batch size of 8 and train the model for 40 epochs with a learning rate of 1e-4, using the Adam optimizer on the Cross Entropy Loss.

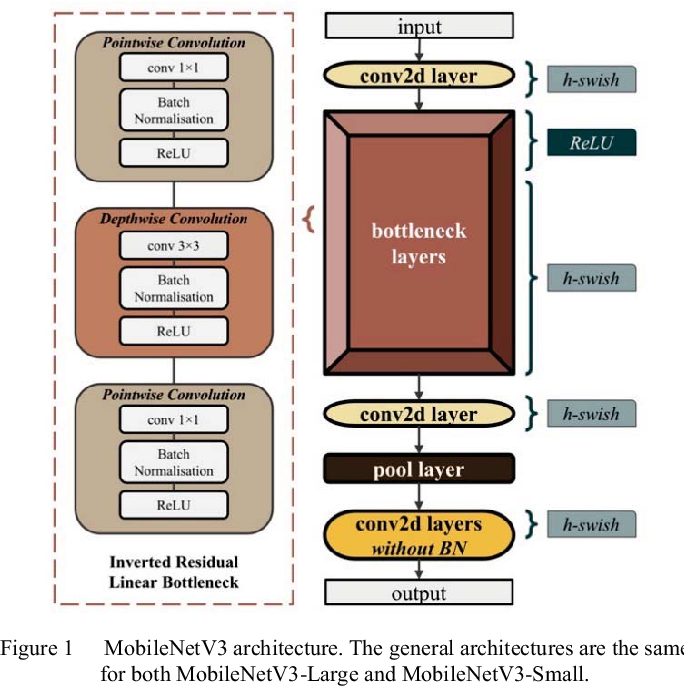

- MobileNet-V3-Small

- MobileNetV3 [3] small is a lightweight neural network architecture optimized for mobile devices with limited computational resources.

- It combines depthwise separable convolutions, linear bottlenecks, and hard-swish and hard-sigmoid activation functions to achieve high accuracy on image classification and other tasks while using fewer parameters and computational resources.

- We use a batch size of 8 and train the model for 40 epochs with a learning rate of 1e-4, using the Adam optimizer on the Cross Entropy Loss.

We chose these three models because their parameter counts differ by roughly a factor of two at each step.

- ResNet18 has roughly twice as many parameters as EfficientNet-b0.

- EfficientNet-b0 has roughly twice as many parameters as MobileNet-v3-small.

- MobileNet-v3 was published after ResNet-18 and is heavily optimized for efficiency: as the top-1 and top-5 ImageNet accuracies in the table below show, it comes within roughly two percentage points of ResNet-18 with about a fifth of the parameters.

- EfficientNet-b0 achieves considerably better ImageNet accuracy than either of the other two models, despite having fewer than half the parameters of ResNet-18.

| Model | Top-1 accuracy (%) | Top-5 accuracy (%) | Parameters |
|---|---|---|---|
| ResNet18 | 69.758 | 89.078 | 11.7M |
| EfficientNet_B0 | 77.692 | 93.532 | 5.3M |
| MobileNet_V3_Small | 67.668 | 87.402 | 2.5M |
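As a quick arithmetic check on the "factor of two" spacing, the ratios can be computed directly from the parameter counts in the table. The dictionary and helper names below are illustrative only.

```python
# Parameter counts in millions, copied from the table above.
params_millions = {
    "ResNet18": 11.7,
    "EfficientNet_B0": 5.3,
    "MobileNet_V3_Small": 2.5,
}


def param_ratio(larger: str, smaller: str) -> float:
    """How many times more parameters `larger` has than `smaller`."""
    return params_millions[larger] / params_millions[smaller]


print(round(param_ratio("ResNet18", "EfficientNet_B0"), 2))            # 2.21
print(round(param_ratio("EfficientNet_B0", "MobileNet_V3_Small"), 2))  # 2.12
print(round(param_ratio("ResNet18", "MobileNet_V3_Small"), 2))         # 4.68
```

Each step down the list roughly halves the parameter count, so the three models span a bit under a 5x range.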

Oversampling and Augmentations

- Oversampling

- Oversampling is a technique used to address class imbalance in datasets, where some classes have significantly fewer samples than others.

- This can lead to poor performance of the model on the underrepresented classes, as they do not have enough examples to learn from.

- The COVID-19 dataset has a class imbalance, as shown in the class distribution visualizations in the next section. We use oversampling to overcome this and see a significant improvement in performance.

- Augmentations

- Compared to the Medical MNIST dataset, which has around 60,000 images, the COVID-19 dataset has only 317 images in total, which is very small for an image classification task using CNNs.

- Image augmentation refers to a set of techniques used to artificially increase the size of a training dataset by creating new, slightly modified versions of the existing images.

- Some common image augmentation techniques include flipping, rotating, cropping, zooming, shifting, and adding noise or blur.

- The primary goal of image augmentation is to increase the diversity and variability of the training dataset, which can improve the model’s generalization performance.

- In our model we use horizontal flip (p=0.1), random rotation, and color jitter (brightness=0.2, contrast=0.2). Combined with oversampling, this gives a large boost in validation performance (see Ablations).

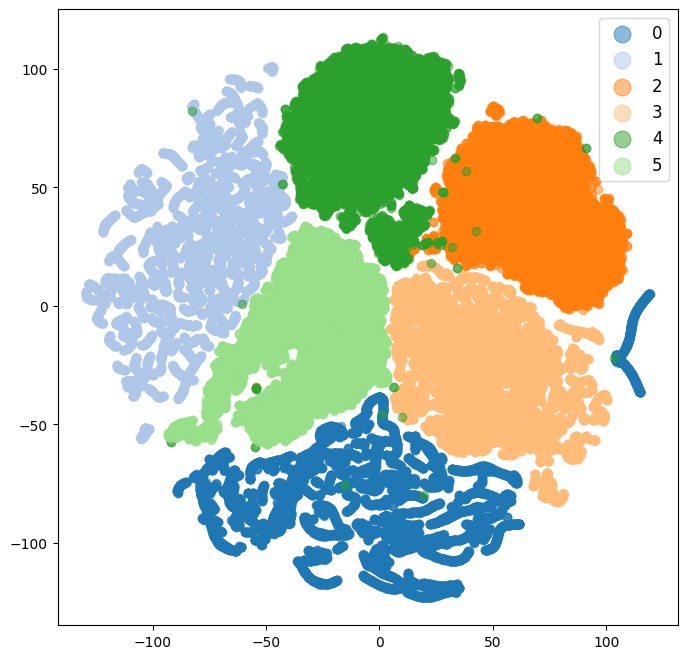

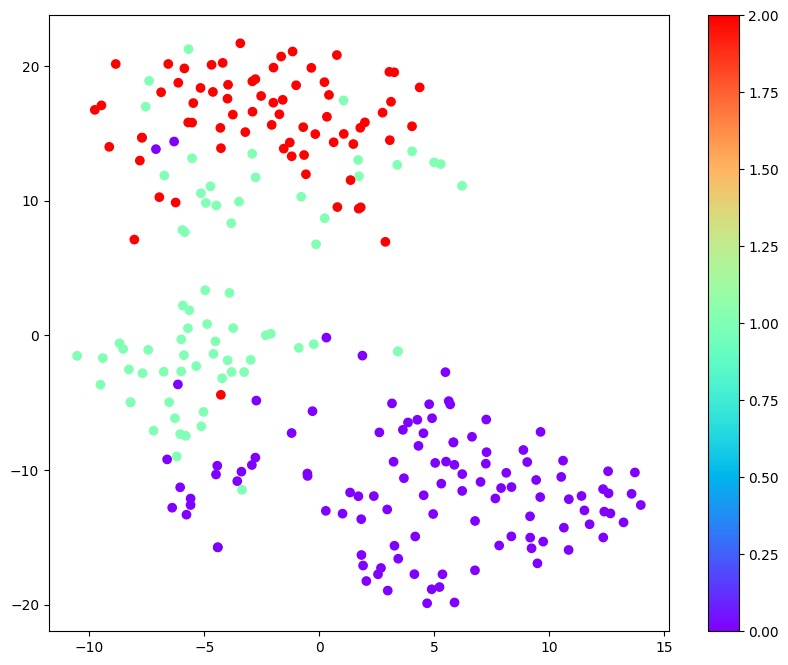

Unsupervised Methods

- We use t-SNE to visualize the raw pixels of the Medical MNIST and COVID-19 datasets.

- After training our best models for both datasets, we also visualize the embedding space and see the improvement in clusters as compared to the raw pixel visualizations.

- The following section shows the class distribution visuals and the t-SNE visualizations.
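A minimal sketch of the t-SNE projection with scikit-learn follows. The same function works for raw pixels or for model embeddings, since both are flattened to one feature vector per image. The helper name `tsne_2d`, the perplexity values, and the random stand-in data are assumptions for illustration; the report does not list the t-SNE settings that were used.

```python
import numpy as np
from sklearn.manifold import TSNE


def tsne_2d(features: np.ndarray, perplexity: float = 30.0, seed: int = 0) -> np.ndarray:
    """Project (n_samples, ...) features to 2D with t-SNE."""
    flat = np.asarray(features, dtype=np.float32).reshape(len(features), -1)
    tsne = TSNE(n_components=2, perplexity=perplexity, init="pca", random_state=seed)
    return tsne.fit_transform(flat)


# Random stand-in for a small batch of images (60 images of 16x16x3).
rng = np.random.default_rng(0)
fake_images = rng.random((60, 16, 16, 3))
coords = tsne_2d(fake_images, perplexity=10.0)  # perplexity must be < n_samples
```

The resulting `coords` array has one 2D point per image and can be passed to a scatter plot colored by class label.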

Visualizations

Stage 1: Medical MNIST

Class Distribution

We visualized the class distribution of the dataset using a bar graph. The bar graph showed that the dataset was well-balanced, with each class containing approximately 10,000 images.

Here is a visualization of the class distribution:

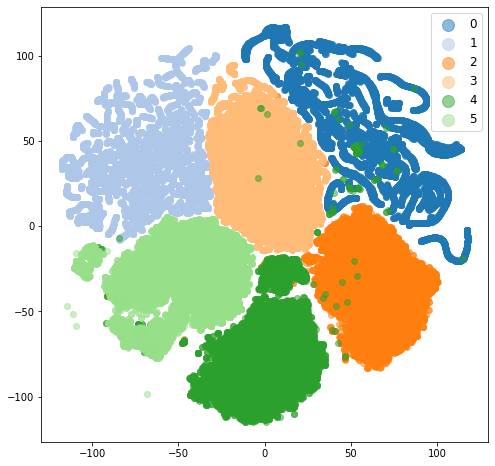

t-SNE Clusters on Raw Pixels

We used t-SNE (t-Distributed Stochastic Neighbor Embedding) to visualize the images in 2D space. We used the scikit-learn library in Python to perform the t-SNE analysis.

The t-SNE visualization showed that the images in the dataset were largely well-clustered by class even on raw pixels. The CXR and Hand classes were well separated, while the Abdomen CT and Chest CT classes overlapped considerably.

Here is a visualization of the t-SNE clusters:

t-SNE Clusters of our trained CNN embeddings

Here is a visualization of the t-SNE clusters:

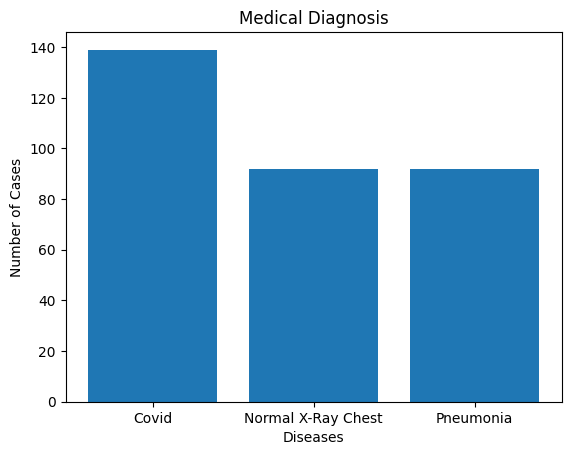

Stage 2: COVID-19

Class Distribution

We visualized the class distribution of the dataset using a bar graph. The bar graph showed that the Covid class has the most images, while the Normal and Viral Pneumonia classes have equal but smaller numbers of images.

Here is a visualization of the class distribution:

t-SNE Clusters on Raw Pixels

Although the three clusters appear separated at first glance, they are intermixed to the extent that they are not easily separable (0: COVID, 1: Normal, 2: Viral Pneumonia). The Viral Pneumonia cluster overlaps heavily with the Normal datapoints and slightly with the COVID datapoints, and there is a small overlap between the COVID and Normal datapoints. After we train our best model, the embeddings show perfect separation of all the datapoints.

Here is a visualization of the t-SNE clusters:

t-SNE Clusters of our trained CNN embeddings

We visualize the embeddings of MobileNet-v3-small, which is our best model. Here is a visualization of the t-SNE clusters:

Results

We report two supervised baselines, one for each dataset, and report their validation performance using the following supervised metrics:

- Accuracy

- Precision and Recall

- F1 Score
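For a multi-class task these metrics need an averaging rule. In the tables of this report, recall equals accuracy in every row, which is consistent with support-weighted averaging across classes; that is an inference from the numbers, not something the report states. The sketch below implements weighted averaging in plain Python to show why that equality holds. The function name and the toy labels are illustrative.

```python
from collections import Counter
from typing import Dict, Sequence


def weighted_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Accuracy plus support-weighted precision, recall, and F1."""
    n = len(y_true)
    pairs = list(zip(y_true, y_pred))
    accuracy = sum(t == p for t, p in pairs) / n
    precision = recall = f1 = 0.0
    for cls, support in Counter(y_true).items():
        tp = sum(t == cls and p == cls for t, p in pairs)
        predicted = sum(p == cls for p in y_pred)
        p_c = tp / predicted if predicted else 0.0
        r_c = tp / support
        f_c = 2 * p_c * r_c / (p_c + r_c) if (p_c + r_c) else 0.0
        weight = support / n  # each class counts in proportion to its size
        precision += weight * p_c
        recall += weight * r_c
        f1 += weight * f_c
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Toy example with three imbalanced classes.
y_true = [0, 0, 0, 0, 1, 1, 2, 2]
y_pred = [0, 0, 0, 1, 1, 1, 2, 0]
metrics = weighted_metrics(y_true, y_pred)
```

Weighted recall sums `(support / n) * (tp / support)` over classes, which reduces to total correct predictions over `n`, that is, accuracy. The weighted F1 is an average of per-class F1 scores, so it can fall below both the weighted precision and the weighted recall, as it does in this toy example.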

Validation Results

| Model | Parameters | Accuracy | F1 score | Precision | Recall |
|---|---|---|---|---|---|
| ResNet18 | 11.7M | 93.94% | 93.88% | 94.95% | 93.94% |
| EfficientNet_B0 | 5.3M | 98.48% | 98.48% | 98.56% | 98.48% |
| MobileNet_V3_Small | 2.5M | 100.00% | 100.00% | 100.00% | 100.00% |

Training Results

| Model | Parameters | Accuracy | F1 score | Precision | Recall |
|---|---|---|---|---|---|
| ResNet18 | 11.7M | 99.20% | 99.20% | 99.22% | 99.20% |
| EfficientNet_B0 | 5.3M | 100.00% | 100.00% | 100.00% | 100.00% |
| MobileNet_V3_Small | 2.5M | 100.00% | 100.00% | 100.00% | 100.00% |

Ablations

EfficientNet-b0 Ablations validation results

| Model | Accuracy | F1 score | Precision | Recall |
|---|---|---|---|---|
| baseline | 77.27% | 77.34% | 78.93% | 77.27% |
| baseline + sampling | 86.36% | 85.64% | 90.60% | 86.36% |
| baseline + augmentation | 59.09% | 57.39% | 80.25% | 59.09% |
| final | 98.48% | 98.48% | 98.56% | 98.48% |

EfficientNet-b0 Ablations training results

| Model | Accuracy | F1 score | Precision | Recall |
|---|---|---|---|---|
| baseline | 99.20% | 99.21% | 99.23% | 99.20% |
| baseline + sampling | 100.00% | 100.00% | 100.00% | 100.00% |
| baseline + augmentation | 69.72% | 66.78% | 85.10% | 69.72% |
| final | 100.00% | 100.00% | 100.00% | 100.00% |

Final Results

| Dataset | Accuracy | F1 Score | Precision | Recall |
|---|---|---|---|---|
| Medical MNIST | 99.87% | 99.87% | 99.87% | 99.87% |
| COVID-19 | 100.00% | 100.00% | 100.00% | 100.00% |

Result Analysis

Medical MNIST

- As expected, Medical MNIST was an easy dataset on which our CNN model achieves near-perfect metrics (99.87%).

- This is due to the simplicity of the dataset, its low dimensionality, and its near-perfect class balance.

- The raw-pixel t-SNE visualization also suggests this, since most of the datapoints were already easily separable.

- The change in the t-SNE visualization from raw pixels to trained CNN embeddings was therefore minimal, as the datapoints were already clustered well.

COVID-19

- The training results show that all three models fit the training set almost perfectly (99.20% to 100.00% accuracy), while the two larger models score lower on the validation set, which indicates overfitting.

- Hence to overcome overfitting, we have to add regularization.

- Reduction in the number of parameters of the model is one effective way to regularize.

- We can observe improvement in performance as we reduce the number of parameters from 11.7M to 5.3M to 2.5M from ResNet-18 to EfficientNet-b0 to MobileNet-V3-Small.

- This aligns with our argument that the model was overfitting, and regularization (reduction in parameters in this scenario) was going to be helpful for the validation performance.
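The overfitting argument above can be made concrete by computing the gap between training and validation accuracy for each model, using the numbers from the Training Results and Validation Results tables. The variable names are illustrative.

```python
# (parameters in millions, training accuracy %, validation accuracy %)
results = {
    "ResNet18": (11.7, 99.20, 93.94),
    "EfficientNet_B0": (5.3, 100.00, 98.48),
    "MobileNet_V3_Small": (2.5, 100.00, 100.00),
}

# Generalization gap in percentage points: training minus validation accuracy.
gaps = {
    name: round(train_acc - val_acc, 2)
    for name, (_, train_acc, val_acc) in results.items()
}

for name, (params, _, _) in results.items():
    print(f"{name:<20} {params:>5.1f}M  gap = {gaps[name]:.2f} points")
```

The gap shrinks from 5.26 points for ResNet18 to 1.52 for EfficientNet_B0 and 0.00 for MobileNet_V3_Small, in step with the falling parameter count.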

COVID-19 Ablations

- We started with an EfficientNet-b0 baseline and got poor performance on the validation set. The large difference between training accuracy (99.20%) and validation accuracy (77.27%) clearly indicates massive overfitting.

- There is also a significant class imbalance, which affects the baseline results.

- Additionally, the number of datapoints is extremely low for classical CNN-based methods.

- To counter the class imbalance, we resort to oversampling during dataloading, which ensures that an equal number of datapoints from each class is sampled, in expectation, during batch formation. This raised the F1 score by about 8 percentage points over the baseline (77.34% to 85.64%).

- To counter the low number of datapoints, we experiment with image augmentation techniques. Although validation performance gets worse, the gap between training and validation accuracy shrinks from about 22 points to about 11 points, i.e. overfitting reduces.

- When we use augmentation and sampling together, we observe an increase of about 21 percentage points in F1 over the baseline model (77.34% to 98.48%), resulting in the best performing model.
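The oversampling step described above amounts to drawing each training sample with a probability inversely proportional to its class frequency. The plain-Python sketch below illustrates the idea; the class counts are illustrative placeholders rather than figures from this report, and the variable names are hypothetical. In a PyTorch pipeline, per-sample weights like these are typically passed to `torch.utils.data.WeightedRandomSampler`.

```python
import random
from collections import Counter

# Illustrative imbalanced label list (0: COVID, 1: Normal, 2: Viral Pneumonia).
# These counts are placeholders, not taken from the report.
labels = [0] * 111 + [1] * 70 + [2] * 70

class_counts = Counter(labels)
num_classes = len(class_counts)

# Inverse-frequency weights: every class ends up with total weight 1/num_classes.
sample_weights = [1.0 / (num_classes * class_counts[y]) for y in labels]

# Draw indices with replacement, as an oversampling data loader would.
rng = random.Random(0)
drawn = rng.choices(range(len(labels)), weights=sample_weights, k=3000)
drawn_counts = Counter(labels[i] for i in drawn)
```

Minority-class images are drawn more often than majority-class images, so batches are balanced in expectation even though the underlying dataset is not.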

Contributions

- Sahil Khose: Supervised Training codebase, training, and evaluation metrics of both Medical MNIST, and COVID-19; EfficientNet-b0, ResNet-18, MobileNet-v3 model training and ablations without augmentations and without sampling; analysis of the results and ablations; GitHub Final Report.

- Richa Kulkarni: Medical MNIST dataloader codebase (2 methods); Data Augmentations for enhancing the performance; Oversampling techniques to overcome Class Imbalance.

- Shubham Kulkarni: Class Distribution Visualization and analysis of Medical MNIST and COVID-19 datasets; TSNE visualization and analysis for Medical MNIST and COVID-19 datasets on raw image pixels; GitHub Final Report; Embedding visualization for trained custom CNN on Medical MNIST and trained MobileNet-v3-small on COVID-19.

- James Wellington: Unsupervised Training codebase (2 methods); Proposal Presentation, Proposal Video, Final Presentation, and Final Presentation Video.

- Sneha Talwalkar: Report review, Gantt chart update, used pre-trained models ResNet-18 and EfficientNet-b0, compared model accuracy and analyzed results by changing epochs and batchsize, updated final report.

Team8_Project tracker_7641 ML.xlsx

References

[1] Simonyan, Karen and Andrew Zisserman. “Very Deep Convolutional Networks for Large-Scale Image Recognition.” CoRR abs/1409.1556 (2014).

[2] Szegedy, C. et al (2015, December 11). “Rethinking the inception architecture for computer vision” arXiv.org. Retrieved February 23, 2023, from https://arxiv.org/abs/1512.00567

[3] Howard, Andrew G. et al, “MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications”, 2017

[4] Kaiming He et al, “Deep Residual Learning for Image Recognition”, 2015

[5] Mingxing Tan and Quoc V. Le, “EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks”, 2019

[6] Gao, Terry. (2020). Chest X-ray image analysis and classification for COVID-19 pneumonia detection using Deep CNN. 10.21203/rs.3.rs-64537/v2.

[7] Hamza, Ameer, Muhammad Attique Khan, Majed Alhaisoni, Abdullah Al Hejaili, Khalid Adel Shaban, Shtwai Alsubai, Areej Alasiry, and Mehrez Marzougui. 2023. “D2BOF-COVIDNet: A Framework of Deep Bayesian Optimization and Fusion-Assisted Optimal Deep Features for COVID-19 Classification Using Chest X-ray and MRI Scans” Diagnostics 13, no. 1: 101. https://doi.org/10.3390/diagnostics13010101

[8] Kumar, S., Mallik, A. COVID-19 Detection from Chest X-rays Using Trained Output Based Transfer Learning Approach. Neural Process Lett (2022). https://doi.org/10.1007/s11063-022-11060-9

[9] Eurosurveillance editorial team. Note from the editors: World Health Organization declares novel coronavirus (2019-nCoV) sixth public health emergency of international concern. Euro Surveill. 2020 Feb;25(5):200131e. doi: 10.2807/1560-7917.ES.2020.25.5.200131e. Epub 2020 Jan 31. PMID: 32019636; PMCID: PMC7014669.

[10] Axell-House DB, Lavingia R, Rafferty M, Clark E, Amirian ES, Chiao EY. The estimation of diagnostic accuracy of tests for COVID-19: A scoping review. J Infect. 2020 Nov;81(5):681-697. doi: 10.1016/j.jinf.2020.08.043. Epub 2020 Aug 31. PMID: 32882315; PMCID: PMC7457918.